AI Chatbots in Mental Health Apps: Building Empathetic Support

The global demand for mental health support is surging. According to the World Health Organization (WHO), hundreds of millions of people live with a mental disorder, yet a vast majority lack access to timely and affordable care. Traditional therapy faces significant barriers: high costs, long waiting lists, and persistent social stigma. In this landscape, technology offers a beacon of hope, and at the forefront of this digital revolution are AI-powered chatbots.

These conversational agents, integrated into mobile applications, promise 24/7, anonymous, and scalable mental health support. They can guide users through mindfulness exercises, deliver evidence-based therapeutic techniques, and offer a listening ear in moments of distress. The potential to democratize mental healthcare is immense. However, this promise is shadowed by significant ethical and technical challenges. While developing a mental health app involves many stages, integrating a sophisticated AI chatbot requires special attention to technology, ethics, and user experience.

This article explores the complex journey of creating an AI chatbot for mental health. We will delve into the technological underpinnings of empathetic AI, navigate the critical ethical minefield, and outline a practical roadmap for building a system that is not only intelligent but also responsible, safe, and genuinely helpful.

The Rise of the AI Therapist: Why Chatbots Are Gaining Traction

The adoption of AI chatbots in mental health isn't just a trend; it's a response to a critical need. As healthcare systems struggle to keep pace, these digital tools are stepping in to fill the void, offering a unique combination of accessibility and privacy that resonates with modern users.

The Accessibility Imperative

For many, the path to professional mental healthcare is fraught with obstacles. AI chatbots dismantle these barriers by providing:

- 24/7 Availability: Mental health crises don't adhere to a 9-to-5 schedule. Chatbots are always available, offering support during a late-night anxiety attack or an early-morning wave of depression.

- Anonymity and Privacy: The fear of judgment prevents many from seeking help. Chatbots provide a non-judgmental space where users can express their thoughts and feelings freely, without revealing their identity.

- Affordability: The cost of traditional therapy can be prohibitive. While some chatbot services are subscription-based, many offer free or low-cost tiers, making initial support accessible to a wider audience.

- Scalability: A single human therapist can only help a limited number of people. A well-designed AI system can support millions of users simultaneously, a crucial advantage in addressing a global health crisis.

Emerging Evidence of Effectiveness

Beyond convenience, research is beginning to validate the clinical potential of therapeutic chatbots. A landmark randomized controlled trial from Dartmouth College, published in March 2025, studied a generative AI chatbot named "Therabot." The results were striking: participants with major depressive disorder saw a 51% average reduction in symptoms, while those with generalized anxiety disorder experienced a 31% reduction (Source: Dartmouth College). Crucially, users reported a "therapeutic alliance" with the bot comparable to that with human therapists.

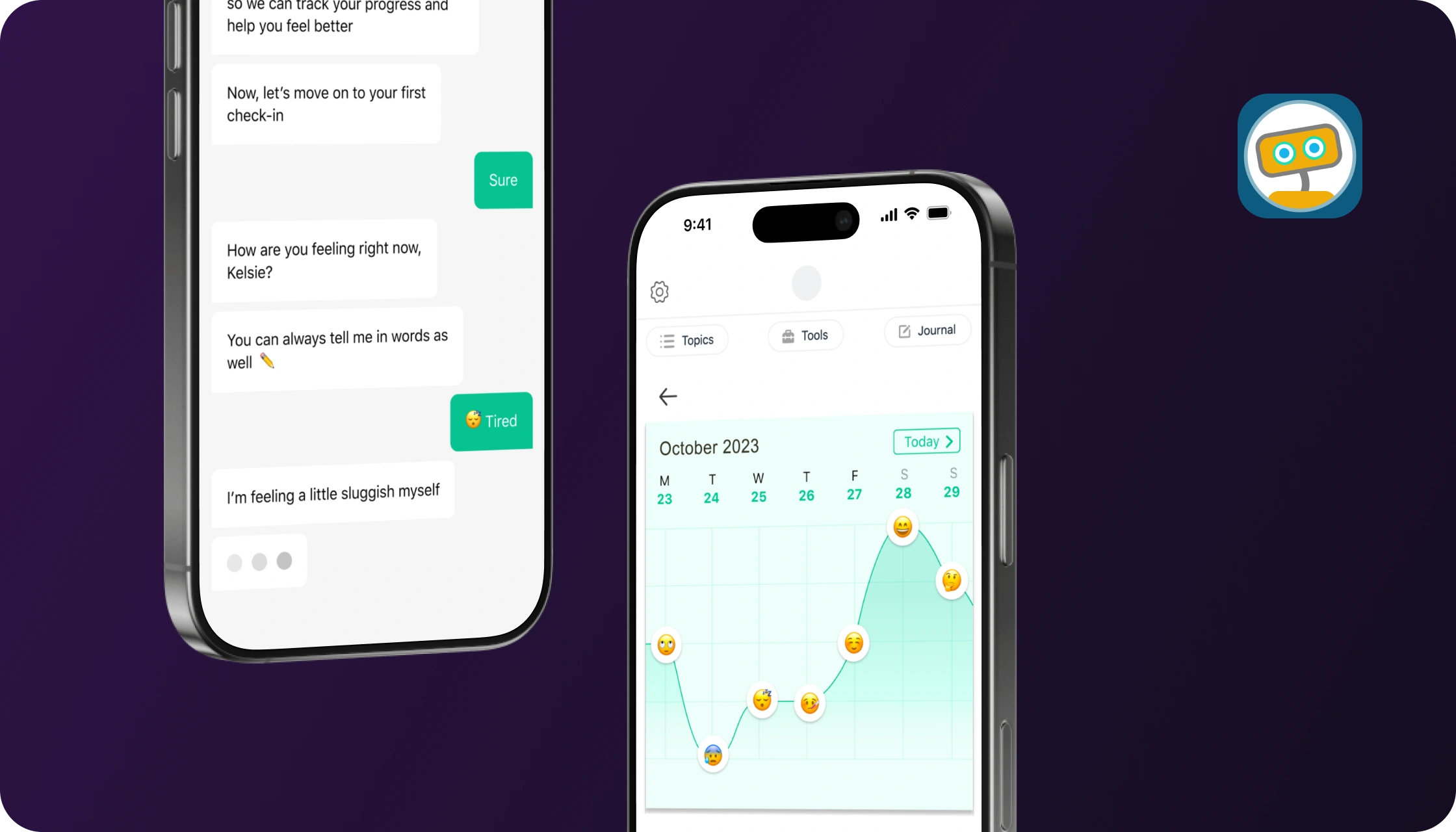

Other established apps like Woebot and Wysa have long demonstrated the power of using AI to deliver evidence-based techniques like Cognitive Behavioral Therapy (CBT). By guiding users through structured exercises, these apps help individuals identify and reframe negative thought patterns, building resilience over time.

AI chatbots like Woebot leverage established therapeutic frameworks like CBT to provide structured support.

The Architecture of Empathy: Technological Foundations

Creating a chatbot that can simulate empathy is a monumental task that sits at the intersection of computer science, linguistics, and psychology. It's not about making a machine "feel," but about designing it to respond in ways that users perceive as understanding, supportive, and helpful. This requires a sophisticated technological architecture.

From Rule-Based to Generative AI

The technology powering chatbots has evolved significantly, moving from simple scripts to complex, self-learning models.

- Rule-Based Systems: The earliest chatbots, like the famous ELIZA from the 1960s, operated on simple "if-then" rules. They recognized keywords and responded with pre-programmed phrases. While safe and predictable, their conversations are rigid and lack depth. Many simple wellness apps still use this approach for basic guidance.

- Machine Learning (ML) & NLP: The next generation of chatbots uses Natural Language Processing (NLP) to understand the nuances of human language, including intent and sentiment. These models are trained on large datasets of conversations, allowing them to provide more flexible and relevant responses than rule-based systems.

- Large Language Models (LLMs): The current frontier is dominated by LLMs built on the Transformer architecture (like the models behind ChatGPT). These systems can generate entirely new, human-like text that is contextually aware and coherent over long conversations. In healthcare settings, such models increasingly operate as AI agents, capable of managing ongoing patient interactions, monitoring emotional cues over time, and supporting structured mental health workflows under defined clinical boundaries. Their ability to produce nuanced, seemingly empathetic dialogue has opened new possibilities for therapeutic applications.
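To make the rule-based end of this spectrum concrete, here is a minimal sketch of an ELIZA-style responder. The rules, replies, and the `rule_based_reply` name are illustrative assumptions, not taken from ELIZA or any production app.

```python
import re

# Hypothetical keyword rules as (pattern, reply template) pairs.
# Order matters: the first matching rule wins.
RULES = [
    (re.compile(r"\b(anxious|anxiety|worried)\b", re.I),
     "It sounds like you're feeling anxious. Would you like to try a breathing exercise?"),
    (re.compile(r"\b(sad|down|depressed)\b", re.I),
     "I'm sorry you're feeling low. Can you tell me more about what's been happening?"),
    (re.compile(r"\bi feel (.+)", re.I),
     "Why do you feel {0}?"),
]
FALLBACK = "Tell me more about that."


def rule_based_reply(message: str) -> str:
    """Return the canned reply for the first rule that matches the message."""
    for pattern, template in RULES:
        match = pattern.search(message)
        if match:
            # Reflect captured text back to the user, minus trailing punctuation.
            captured = [g.rstrip(".!? ") for g in match.groups()]
            return template.format(*captured)
    return FALLBACK


print(rule_based_reply("I feel lost."))
```

The rigidity is easy to see: the word "down" in "I sat down" would trigger the low-mood reply, which is the kind of failure that pushed the field toward learned models.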

Building the "Empathy Engine"

An effective therapeutic chatbot relies on a multi-layered system designed to interpret and respond to a user's emotional state.

- Natural Language Processing (NLP): This is the core of the system. NLP algorithms break down a user's text or speech to analyze its grammatical structure, identify key entities (people, places, events), and, most importantly, determine the user's intent. Is the user asking a question, expressing sadness, or describing a situation?

- Emotion and Sentiment Analysis: Advanced models can detect the emotional tone of a message. They can distinguish between anger, joy, sadness, and fear, often with a high degree of accuracy. This allows the chatbot to tailor its response—offering validation for sadness, calming words for anxiety, or encouragement for positive steps.

- Personalization Algorithms: A one-size-fits-all approach doesn't work in mental health. The chatbot must learn from its interactions with each user. By remembering past conversations, stated goals, and mood-tracking data, the AI can provide personalized recommendations and check in on progress, making the experience feel more like a continuous relationship.

- Response Generation: Based on the analysis of the user's input and their profile, the response generation module crafts a reply. In LLMs, this is a generative process that creates a unique response. In simpler systems, it might select the most appropriate response from a vast library. The key is to ensure the response aligns with therapeutic best practices—it should be supportive, non-judgmental, and constructive.
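The four layers above can be sketched as a toy pipeline. Everything here is an assumption made for illustration: the word lists stand in for a trained emotion classifier, the canned replies stand in for clinician-approved content or an LLM, and names such as `UserProfile` and `generate_reply` are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List

# Toy emotion lexicon (illustrative only; real systems use trained classifiers).
EMOTION_LEXICON = {
    "sadness": {"sad", "hopeless", "lonely", "empty"},
    "anxiety": {"anxious", "worried", "nervous", "panic"},
    "joy": {"happy", "proud", "excited", "grateful"},
}

# Placeholder replies; in practice these would be clinician-vetted.
RESPONSES = {
    "sadness": "That sounds really hard. Thank you for sharing it with me.",
    "anxiety": "That sounds stressful. Would a short grounding exercise help?",
    "joy": "That's great to hear. What helped make it happen?",
    "neutral": "I'm listening. What's on your mind?",
}


@dataclass
class UserProfile:
    goal: str = ""
    mood_history: List[str] = field(default_factory=list)


def detect_emotion(message: str) -> str:
    """Pick the emotion whose lexicon overlaps most with the message words."""
    words = {w.strip(".,!?").lower() for w in message.split()}
    scores = {emotion: len(words & vocab) for emotion, vocab in EMOTION_LEXICON.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "neutral"


def generate_reply(message: str, profile: UserProfile) -> str:
    """Analyze the message, update the user's history, and build a reply."""
    emotion = detect_emotion(message)
    profile.mood_history.append(emotion)
    reply = RESPONSES[emotion]

    # Personalization: acknowledge a negative emotion repeated over three turns.
    recent = profile.mood_history[-3:]
    if len(recent) == 3 and len(set(recent)) == 1 and emotion in ("sadness", "anxiety"):
        reply += " I've noticed this has come up a few times recently."
    # Personalization: tie positive moments back to the user's stated goal.
    if profile.goal and emotion == "joy":
        reply += f" That sounds like progress toward your goal: {profile.goal}."
    return reply


profile = UserProfile(goal="sleep better")
print(generate_reply("I'm happy today!", profile))
```

Keeping analysis, memory, and response selection as separate steps makes each one easier to audit and to swap for a stronger model later.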

The Empathy Paradox: Navigating the Ethical Minefield

The very power of AI chatbots—their ability to simulate human connection—is also the source of their greatest risks. As these tools become more integrated into our lives, developers, clinicians, and users must confront a host of complex ethical challenges.

"While these results are very promising, no generative AI agent is ready to operate fully autonomously in mental health where there is a very wide range of high-risk scenarios it might encounter." - Dr. Michael Heinz, Dartmouth College

Deceptive Empathy and the Risk of Attachment

An LLM can be prompted to say "I understand how you feel" or "I'm here for you." While comforting, this is what researchers call "deceptive empathy." The bot doesn't feel; it predicts the next most probable word in a sequence. A 2025 study from Brown University highlighted how this can be harmful, creating a false sense of intimacy that could lead to unhealthy dependency or feelings of abandonment if the service is discontinued (Source: Brown University). Some reports even describe "AI psychosis," where vulnerable individuals develop delusional beliefs from their interactions with chatbots.

Bias in the Algorithm

AI models are trained on vast amounts of text and data from the internet, which is rife with human biases. If not carefully mitigated, these biases can be amplified by the AI, leading to discriminatory or harmful outcomes.

- Racial and Cultural Bias: A 2025 study found that leading LLMs often proposed inferior treatment plans when a patient was identified as African American. They might over-diagnose certain conditions or fail to recommend appropriate medications compared to race-neutral cases.

- Gender and Identity Bias: Models can reinforce stereotypes related to gender roles or mental health conditions, potentially invalidating a user's experience or offering misguided advice.

Addressing this requires a concerted effort in "debiasing," which involves using diverse and representative training data, implementing algorithmic fairness checks, and continuous auditing of the model's outputs.
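One simple form of output auditing is a counterfactual test: send the same prompt with only the demographic descriptor changed and compare the results. The sketch below assumes a hypothetical `model` callable and uses placeholder group labels. The exact string comparison is deliberately crude, and a real audit would have clinicians rate the quality of each recommendation.

```python
from collections import Counter
from typing import Callable, Dict, List


def counterfactual_audit(
    model: Callable[[str], str],
    template: str,
    groups: List[str],
) -> Dict[str, str]:
    """Run one prompt per group, varying only the demographic descriptor."""
    return {group: model(template.format(group=group)) for group in groups}


def flag_disparities(outputs: Dict[str, str]) -> List[str]:
    """Return the groups whose output differs from the most common output."""
    majority, _count = Counter(outputs.values()).most_common(1)[0]
    return [group for group, out in outputs.items() if out != majority]


def stub_model(prompt: str) -> str:
    # Deliberately biased stand-in so the audit has something to catch.
    if "Group B" in prompt:
        return "Suggest self-help resources only."
    return "Suggest CBT and a clinician referral."


outputs = counterfactual_audit(
    stub_model,
    "A patient from {group} reports low mood for two weeks.",
    ["Group A", "Group B", "Group C"],
)
print(flag_disparities(outputs))
```

Flagged groups are a starting point for human review, not proof of bias on their own.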

Privacy and Data Security: A Ticking Time Bomb

Conversations with a mental health chatbot contain some of the most sensitive personal data imaginable. The misuse of this data can have devastating consequences. A 2023 Mozilla Foundation report found that the majority of mental health apps had poor privacy practices. The U.S. Federal Trade Commission (FTC) has already taken action against companies for sharing user health information with advertising platforms like Meta and Snapchat without consent.

Developers must prioritize privacy by design, adhering to strict regulations like HIPAA (Health Insurance Portability and Accountability Act) in the U.S. and GDPR (General Data Protection Regulation) in Europe. This includes:

- Encryption: Securing data both in transit and at rest, with end-to-end encryption where the architecture allows it.

- Data Anonymization: Removing personally identifiable information (PII) before using data for analytics or model training.

- Transparent Policies: Clearly explaining to users what data is collected and how it is used, and obtaining explicit consent.
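As a rough illustration of the anonymization step, the sketch below masks email addresses and phone numbers and replaces user IDs with a keyed hash. The patterns and function names are assumptions for this example. Regex scrubbing alone misses names, addresses, and other free-text identifiers, so it does not amount to HIPAA or GDPR compliance.

```python
import hashlib
import hmac
import re

# Simplified patterns (assumptions); real PII detection needs far broader coverage.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact_pii(text: str) -> str:
    """Mask email addresses and phone-like number sequences in free text."""
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    return text


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Replace a user ID with a keyed hash so records can be linked
    for analytics without exposing the original identifier."""
    digest = hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


print(redact_pii("Reach me at jane.doe@example.com or 555-123-4567."))
```

Using a keyed hash (HMAC) rather than a plain hash means someone without the key cannot recompute the mapping from a list of known user IDs.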

Accountability and Regulation

When a human therapist makes a mistake, there are licensing boards and legal frameworks for accountability. Who is responsible when an AI chatbot gives harmful advice? The developer? The company that deployed it? The user who misinterpreted it? This gray area is a major concern for regulators. In a sign of growing scrutiny, the U.S. Food and Drug Administration (FDA) scheduled a committee meeting for November 2025 to discuss the regulation of AI-enabled mental health devices. This signals a move toward holding these tools to higher standards of safety and efficacy, similar to other medical devices.

Privacy and regulatory compliance are paramount, especially for platforms that handle sensitive health data.

A Roadmap for Building a Responsible AI Chatbot

Developing a safe and effective mental health chatbot requires a multidisciplinary, ethics-first approach. It's not just a software project; it's a clinical and ethical undertaking.

Phase 1: Foundational Strategy and Design

- Define a Clear Clinical Purpose: Is the bot for general wellness, CBT for anxiety, or support for depression? A narrow, well-defined scope is safer and more effective than a general-purpose "AI therapist."

- Empathy-Driven Design: The user experience (UX) must be calming and intuitive. Use a soothing color palette, simple navigation, and clear language. The goal is to reduce cognitive load, not add to it.

- Assemble a Cross-Functional Team: The team must include not only AI engineers and UX designers but also licensed clinicians, ethicists, and legal experts specializing in healthcare regulations.

Phase 2: Technology and Development with a Human in the Loop

The "Human-in-the-Loop" (HITL) model is emerging as the gold standard for safety. This means that AI does not operate autonomously but works in partnership with human oversight.

The Human-in-the-Loop (HITL) Model

The HITL approach ensures that AI serves as a tool to augment, not replace, human expertise. In practice, this can mean several things:

- Content Vetting: All therapeutic content and pre-scripted responses are written or approved by licensed clinicians.

- Model Supervision: Human experts review and rate the AI's conversations to correct errors and refine its performance.

- Crisis Escalation: The AI is trained to detect signs of a crisis (e.g., suicidal ideation) and immediately escalate the user to a human crisis counselor or emergency service.

Companies like Wysa have successfully used this model to scale their services safely, ensuring a human is always available when the AI reaches its limits.
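A minimal sketch of one way to wire human oversight into the reply path: drafts that are not clinician-approved and fall below a confidence threshold are held for review instead of being sent. The `DraftReply` fields, the confidence score, and the 0.8 threshold are all hypothetical, and this is not a description of how Wysa or any other product works.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class DraftReply:
    user_id: str
    text: str
    # Assumed to come from the model or a separate safety classifier.
    confidence: float
    # True when the text is a pre-scripted response vetted by a clinician.
    clinician_approved: bool = False


@dataclass
class ReviewQueue:
    pending: List[DraftReply] = field(default_factory=list)


def route_reply(draft: DraftReply, queue: ReviewQueue, min_confidence: float = 0.8) -> str:
    """Send vetted or high-confidence drafts; hold everything else for a human."""
    if draft.clinician_approved or draft.confidence >= min_confidence:
        return "send"
    queue.pending.append(draft)
    return "hold_for_review"


queue = ReviewQueue()
print(route_reply(DraftReply("u1", "Try this breathing exercise.", 0.55), queue))
print(len(queue.pending))
```

The held drafts double as training signal: reviewers' corrections can feed back into the model supervision step described above.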

Phase 3: Building a Robust Crisis Management System

This is arguably the most critical safety feature. A mental health chatbot must have a reliable, thoroughly tested protocol for handling emergencies. This system should be designed to be unambiguous and immediate.

| Component | Description | Implementation Example |
|---|---|---|
| Crisis Detection | The AI must be trained on specific keywords, phrases, and sentiment patterns that indicate a user is in crisis or at risk of self-harm. | Using NLP to flag phrases like "want to end my life" or a sudden, sharp drop in sentiment score. |
| Immediate Intervention | Once a crisis is detected, the bot's normal conversational flow must stop. It should present a clear, direct message. | "It sounds like you are going through a very difficult time. It's important to speak with someone who can support you right now." |
| Emergency Resources | The app must provide an unmissable "panic button" or link that connects the user to help. | Displaying a button to call a national crisis hotline (like 988 in the U.S.) or providing a direct link to a 24/7 crisis chat service. |
| Human Escalation Path | For apps with a HITL model, the system should automatically alert a human monitor or on-call therapist. | Sending an urgent notification to a human support team who can then reach out to the user or emergency services if necessary. |
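The components in the table can be sketched as a single check that runs before any normal reply is sent. The phrase list, the 0.6 drop threshold, and the assumption that an upstream model supplies sentiment scores in the range -1 to 1 are all illustrative. A production system would need clinically validated detection and far broader coverage.

```python
import re
from dataclasses import dataclass
from typing import Callable, List, Optional

# Illustrative phrase patterns; a real list would be built with clinicians.
CRISIS_PATTERNS = [
    re.compile(r"\bend my life\b", re.I),
    re.compile(r"\bkill myself\b", re.I),
    re.compile(r"\bsuicid(e|al)\b", re.I),
]
SENTIMENT_DROP_THRESHOLD = 0.6  # assumed value on a -1..1 sentiment scale

CRISIS_MESSAGE = (
    "It sounds like you are going through a very difficult time. "
    "It's important to speak with someone who can support you right now. "
    "In the U.S., you can call 988 to reach a crisis hotline."
)


@dataclass
class CrisisCheck:
    is_crisis: bool
    reason: Optional[str] = None


def check_for_crisis(message: str, sentiment_history: List[float],
                     current_sentiment: float) -> CrisisCheck:
    """Flag explicit crisis phrases or a sharp drop from the user's baseline."""
    for pattern in CRISIS_PATTERNS:
        if pattern.search(message):
            return CrisisCheck(True, "crisis phrase")
    if sentiment_history:
        baseline = sum(sentiment_history) / len(sentiment_history)
        if baseline - current_sentiment >= SENTIMENT_DROP_THRESHOLD:
            return CrisisCheck(True, "sentiment drop")
    return CrisisCheck(False)


def respond(message: str, sentiment_history: List[float], current_sentiment: float,
            normal_reply: str, notify_human: Callable[[str], None]) -> str:
    """Interrupt the normal flow and alert a human when a crisis is detected."""
    check = check_for_crisis(message, sentiment_history, current_sentiment)
    if check.is_crisis:
        notify_human(check.reason)
        return CRISIS_MESSAGE
    return normal_reply


alerts: List[str] = []
print(respond("I want to end my life", [], 0.0, "Tell me more.", alerts.append))
```

Running the check ahead of response generation, rather than inside it, keeps the crisis path deterministic even when the conversational model is generative.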

Phase 4: Rigorous Testing, Launch, and Iteration

- Bias and Safety Audits: Before launch, the model must be rigorously tested for biases and failure points. Use simulated conversations representing diverse user groups and high-risk scenarios.

- Beta Testing with Real Users: Gather feedback from a controlled group of users to identify usability issues and unexpected AI behaviors.

- Post-Launch Monitoring: Continuously monitor the AI's performance, gather user feedback, and track clinical outcomes. The development process doesn't end at launch; it's an ongoing cycle of improvement.

Conclusion: The Future of AI in Mental Health is Collaborative

AI chatbots are not a panacea for the mental health crisis, nor are they a replacement for human therapists. Their true potential lies in their ability to act as a bridge—a first step for those hesitant to seek help, a supportive tool between therapy sessions, and an accessible resource for daily wellness management.

Building these systems is a profound responsibility. It demands a shift from a purely tech-driven mindset to one that is deeply rooted in clinical ethics, user safety, and human-centered design. By prioritizing transparency, embracing human oversight, and designing for the most vulnerable users, we can harness the power of AI to create conversational systems that are not just intelligent, but also wise, compassionate, and truly beneficial to human well-being.